Headlines continue to be dominated by news of widespread layoffs in technology, finance and other sectors. Many companies reducing their workforces cite reasons such as overzealous hiring during the pandemic, an uncertain economy and lower-than-expected revenues.

Recent research by Capterra, a software review company in Arlington, Va., suggests that while the rationales for those layoffs may have varied, there was likely a common denominator: reliance on algorithms and HR software to determine who got to stay and who was shown the door.

Capterra found that 98 percent of HR leaders said they would rely on algorithms and software to determine layoffs, if needed, in 2023. More than one-third said they would rely solely on data fed into algorithms to come up with recommendations to reduce labor costs in a recession.

Rise in Data-Driven Decision-Making

The use of AI-powered analytics to make downsizing decisions reflects the continuation of a trend that began more than a decade ago. A push from the C-suite to become more data-driven, coupled with the emergence of sophisticated people analytics software, has made HR much more tech-enabled. HR industry analysts say this has led to the growing use of next-generation tools that can crunch vast amounts of data from different HR systems to generate recommendations and insights.

Yet whether the use of algorithms to make layoff decisions is a sound practice has sparked debate in the HR community. While some applaud it as a long-overdue use of more objective and quantitative data, others caution that the limitations of algorithms can lead to biased layoff decisions.

The rapid adoption by organizations of ChatGPT and other generative AI tools to automate tasks such as writing e-mails, crafting computer code and creating job descriptions is another sign to some that the use of algorithms has achieved new value and acceptance in the workplace. For skeptics, however, the inaccuracies and canned responses that ChatGPT can generate raise red flags about an overreliance on algorithms to drive critical workforce decisions or create key messaging.

The Capterra study found that when organizations want layoff recommendations based on performance rather than on job role, they typically feed four primary types of data into algorithms: skills data, performance data, work status data (e.g., full-time, part-time or contractor), and attendance data. The software analyzes that information and follows guidelines provided by HR and other functions to determine how many employees should be laid off in given areas.

One surprise from the Capterra research, according to some experts, is that once-popular “flight-risk data”—predictive analytics that forecast which employees are most likely to leave a company by evaluating such metrics as time since last promotion, performance reviews, pay level and more—ranked at the very bottom of data types most often used to make layoff decisions.

“It may be an indication that flight-risk metrics have fallen out of favor,” says Brian Westfall, a principal HR analyst at Capterra. “It’s interesting, because you’d think if you had reliable analytics showing someone might be thinking of leaving the company anyway, those people could be made top candidates for layoff before cutting other employees.”

Algorithms Grow More Sophisticated

Westfall says algorithms used for performance-based layoff decisions are typically part of third-party vendors’ software platforms. He maintains that when properly tested for bias and proven to be reliable and valid, many of these algorithms can now instill greater trust among human resource professionals when it comes to making accurate and fair recommendations.

“The algorithms have improved to a point where you often don’t need a data scientist in the HR department to analyze data,” Westfall says. “Being more data-driven in decision-making is largely a positive for HR, because I don’t think any organization wants to return to the flawed practices of the past, like last-in, first-out kind of layoff decisions.”

David Brodeur-Johnson, employee experience research lead with Cambridge, Mass.-based research and advisory firm Forrester, says using recommendations produced by algorithms has its place—as long as those outputs are applied with the appropriate amount of human review or interpretation.

“Using well-tested and validated algorithms allows you to get insights at scale about employee performance that are difficult to get any other way,” Brodeur-Johnson says. “The problem can be that a worker’s value, or what they actually contribute to an organization’s success, may not be represented in the metrics the algorithm is using for its recommendations. HR leaders also need to have a good understanding of what algorithms can’t tell you when making layoff decisions based on performance.”

For example, Brodeur-Johnson says there may be a seasoned employee working in a call center who’s one of the few in the unit capable of resolving complex customer problems.

“Metrics used to measure performance that are fed into an algorithm may show that employee’s call volume is lower than others and the time spent on each call is somewhat longer than what’s expected,” he says. “But because of that person’s ability to successfully resolve problems, they’re why so many customers say in surveys they’ll continue to do business with the company.”

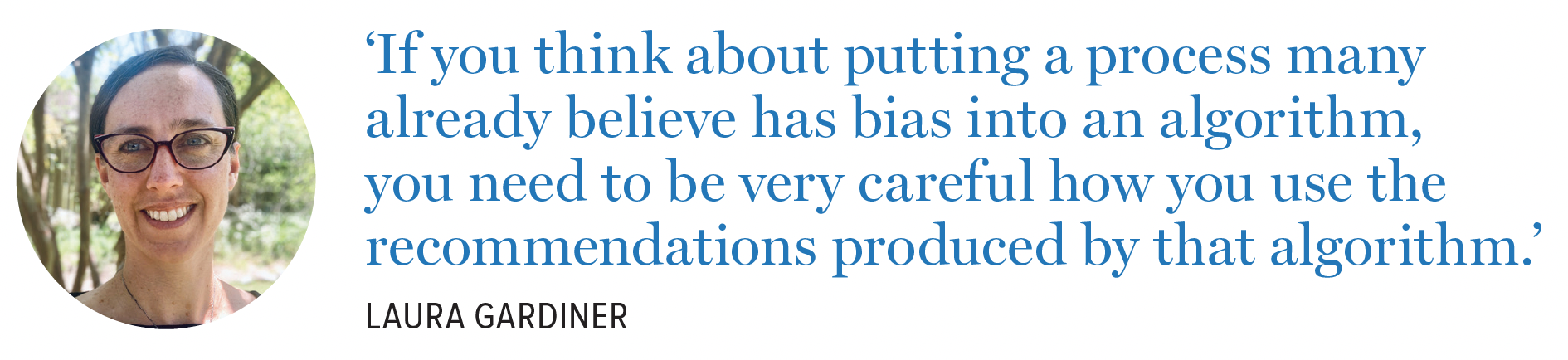

Laura Gardiner, a Memphis, Tenn.-based director analyst in Gartner’s HR practice, agrees that there are positives to HR using more quantitative data to make layoff or labor cost-reduction decisions—but with an important caveat.

“That approach requires that the data going into the algorithm and the processes used to collect that data are without bias, accurate and represent relevant criteria in terms of evaluating someone’s performance,” she says. “That’s not always a given.”

To that end, Gardiner cites research showing an ongoing lack of confidence in the performance management process used in many organizations. A recent Gartner study found that 62 percent of HR business partners believe their companies’ performance management processes are susceptible to bias.

“If you think about putting a process many already believe has bias into an algorithm, you need to be very careful how you use the recommendations produced by that algorithm,” Gardiner says.

Get to Know Your Vendor’s Algorithms

Most algorithms used by organizations to guide layoff decisions are part of technology vendors’ software platforms, rather than developed in-house. HR industry analysts say that means HR should apply an extra level of scrutiny to those vendors’ algorithms to ensure they’re reliable, valid, privacy-conscious and transparent.

For starters, HR leaders should ask vendors about their bias-testing practices and whether an independent third party has audited the algorithms. Such audits should ideally occur at regular intervals throughout the year. Bringing in IT specialists and attorneys can also help HR assess vendors’ AI tools.
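One simple check that a bias audit might include is comparing how often the software recommends layoffs across employee groups. The sketch below is illustrative only: the group labels and sample records are hypothetical, the labels are used solely for auditing rather than as inputs to the algorithm, and a real audit would involve far more rigorous statistical and legal review.

```python
from collections import defaultdict


def recommendation_rates(records):
    """Return the share of employees recommended for layoff in each group."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        if recommended:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


# Hypothetical audit sample: (group label, recommended for layoff?).
records = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]
rates = recommendation_rates(records)
print(rates)
```

A large gap between groups does not prove bias on its own, but it is the kind of result HR would want a vendor or an independent auditor to explain.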

“I think the cautions in using either externally or internally created algorithms are the same, because even if you’re building that algorithm in-house, it’s usually not the person creating it who truly understands its data sources,” Gardiner says.

“The concern overall should be, do you really know what’s being fed into the algorithm and have you tested the outcomes?” she says. “If an organization feels it has fully validated the outcomes of the algorithm and it has quality data going in, then the concerns will be fewer.”

Westfall advises HR leaders to make sure they have a crystal-clear understanding of how a vendor’s algorithms work. Some vendors still prevent users from “peering behind the curtain” to get details of how the AI truly functions, ostensibly to protect intellectual property or to simplify the process for HR professionals who may not be tech-savvy.

At a minimum, HR analysts and legal experts say, vendors should ensure—and HR should verify—that protected employee data such as race, gender, disability or age isn’t being fed into algorithms to make layoff decisions.
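As a rough illustration of that verification step, the sketch below scans a list of algorithm inputs for protected fields. The field names and the `PROTECTED_FIELDS` set are hypothetical and not drawn from any vendor’s product. A name check like this also would not catch proxy variables that merely correlate with protected traits.

```python
# Hypothetical field names; a real HR system will use its own schema.
PROTECTED_FIELDS = {"race", "gender", "disability", "age"}


def find_protected_fields(feature_names):
    """Return the protected fields present in a list of algorithm inputs."""
    normalized = {name.strip().lower() for name in feature_names}
    return sorted(normalized & PROTECTED_FIELDS)


# Example input list, assumed for illustration only.
features = ["skills_score", "performance_rating", "Age", "attendance_rate"]
flagged = find_protected_fields(features)
print(flagged)
```

Any field the check flags would need to be removed, or its presence justified, before the data is used for layoff recommendations.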

But far more due diligence is encouraged.

“You want to know what data points the algorithms look at, how they are weighted and how the algorithm actually works in making recommendations,” Westfall says. “If you’re just buying a software product off the shelf that advertises slick predictive analytics and promises to deliver accurate recommendations without doing your homework, you risk investing in something that can create biased or flawed decisions.” —D.Z.

Bring a Critical Eye

Westfall says HR leaders should apply a healthy skepticism when using algorithms to make performance-based downsizing decisions. “They need a good understanding of the biases that can influence the data and the process,” he says.

For example, Capterra’s findings stressed that relying too heavily on algorithms to make layoff decisions could cause decision-makers to miss factors such as whether employees have a poor or biased manager, whether they lack adequate resources or support to work effectively, and whether the software is tracking the right metrics to accurately gauge performance.

Only 50 percent of the HR leaders surveyed by Capterra were “completely confident” that algorithms or HR software would make unbiased recommendations, and fewer than half were comfortable with making layoff decisions based primarily on that technology.

“There’s a dichotomy where organizations want to rely more on objective performance data to make layoff decisions, but they also understand the process that’s generating their performance data can be flawed,” Westfall says.

Uncovering Hidden Value

Gardiner says employers should exercise caution when using certain data, such as employee skills, to make layoff recommendations—which almost two-thirds of respondents in the Capterra study reported doing.

“The algorithms used typically don’t monitor how those skills are being collected and placed into a skills database or how they’re validated,” Gardiner says. “Does the dataset being used have all of an employee’s updated skills on file? These are the types of questions you need to ask.”

She says algorithms also have limitations because they can’t apply true “human context” to workforce reduction recommendations.

“Say you have an all-star employee who’s done fantastic work for a decade but who is currently going through some sort of temporary personal crisis,” Gardiner says. “Depending on how you select and use performance data, an algorithm might recommend laying that person off based only on recent performance. That’s why human context and observation should always be part of these decisions.”
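Gardiner’s scenario can be shown with a small sketch that compares an employee’s long-run average rating with an average of only the most recent reviews. The 1-to-5 rating scale, the two-review window and the sample ratings are all assumptions made for illustration, not details from the Capterra or Gartner research.

```python
from statistics import mean


def performance_summary(ratings, recent_window=2):
    """Compare a long-run average rating with a recent-only average."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    recent = ratings[-recent_window:]
    return {"long_run": mean(ratings), "recent": mean(recent)}


# Hypothetical ratings, oldest first: years of strong work, then a dip.
ratings = [5, 5, 4, 5, 5, 5, 4, 5, 2, 2]
summary = performance_summary(ratings)
print(summary)
```

An algorithm that looks only at the recent window would see a low performer, while the full history shows a consistently strong one. Which view the software takes depends on how the performance data is selected.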

Performance data used in many algorithms also doesn’t factor in intangibles. Employees who volunteer to train co-workers, for example, help to build and sustain a positive workplace culture. “Someone observing on the ground would see that value, but if that kind of data isn’t being captured in an algorithm, it won’t be factored into a layoff recommendation,” Gardiner says.

Brodeur-Johnson recommends broadening the type of data used to make merit-based layoff decisions beyond single manager evaluations to include tools such as 360-degree surveys that feature peer reviews. “You want a more complete picture of the circumstances employees are working under,” he says.

The Right Mix of Data and Instinct

Gardiner believes organizations should seek a balance of algorithmic and human data points when making decisions that have such a big impact on people’s lives.

“There should always be a balance of manager or peer evaluation with system-generated data,” she says. “What that balance is will depend on the quality of your data and the quality of your management. Has the organization invested heavily in manager training and retraining around performance management? Is your data reliable, mature and validated?”

Ben Eubanks is chief research officer at Lighthouse Research, an HR advisory and research firm in Huntsville, Ala., and the author of Artificial Intelligence for HR (Kogan Page, 2018). He says it’s important to remember that algorithms aren’t the only source of biased recommendations. Humans have long been guilty of making biased hiring or firing decisions, and AI is trained on large datasets based on historic human choices.

“There’s always some bias in our decisions as humans,” Eubanks says. “But if there’s an awareness of that problem and organizations can combine human judgment with what’s ideally more unbiased data around employee performance or skills generated by algorithms, it creates better outcomes.”

Dave Zielinski is a freelance business journalist in Minneapolis.

Illustration by Michael Korfhage.